Basic Concepts in Device Development

Advanced Biostatistics for Translational Research

Authors

Chris Mullin and Roseann White

Statistical methods provide a formal set of mathematically based tools to facilitate scientific evaluation of data. Different stages of the translational research process require different tools, and similarly, different medical technologies may employ different tools at different times. Some specialized statistical methods that were developed to evaluate and understand medical data are now well known and accepted by clinicians and the research community; examples include survival or time-to-event analyses such as the Kaplan-Meier estimator.

As modern translational research is asked to solve ever more complicated and nuanced public health problems under increasing resource constraints, novel methods offer opportunities to reduce the data burden of research and the time to market for new therapies. New methods should preserve favorable aspects of older methods while offering additional advantages. Ideally, new methods retain standards of validity, statistical efficiency, and freedom from bias while offering improvements in aspects such as statistical power, flexibility, or breadth of application. Accordingly, common concepts for assessing the value of statistical methods apply equally to old and new methods. Additionally, advanced methodologies must be well understood in terms of their definition, operation, limitations, and any barriers to their uptake. This section surveys several advanced statistical methods particularly relevant to modern translational research.

General Considerations on the Choice of Novel Statistical Methods

When used successfully, statistics provides an efficient, concise, and scientifically accurate way of summarizing data and informing decision making. Less desirable uses of statistics include coming to premature or incorrect conclusions, obfuscating potential insights, and data-dredging aligned with pre-determined outcomes. Unfortunately, it is not always clear when one is using novel statistical methods for the former or the latter. New statistical methods create an extra level of potential confusion simply due to their novelty. To guide scientists and readers of scientific works in understanding how best to apply and interpret novel statistical methods, it is valuable to ask the following questions, none of which requires especially sophisticated statistical training:

— Do the novel methods address a practical problem?

Statisticians are constantly developing new methods based on a wide array of mathematical assumptions and optimality criteria. Understanding what precisely a new method offers over a more established method guides the comparison and choice of method.

— What problems does a novel method raise?

New methods will likely rely on a different set of assumptions than established methods. Are these assumptions reasonable? Are there other issues raised by the new method, including difficulty of use (e.g., does new software need to be developed, does the method require additional time, is it error prone), interpretation challenges, or unacceptable performance tradeoffs?

— For a particular study that employs a new method, was it prespecified?

An unsuccessful study may be subject to extensive reanalysis to try to salvage something of interest. This raises concerns of type I error inflation and selection bias. Studies that utilize prespecified methods guard against such issues.

— Were the study design and sample size based on the prespecified method of analysis?

If use of a novel method was prespecified, but the study design or sample size was not selected based on that method, there may be concerns about validity, interpretation, or power. Simulation may be particularly useful for evaluating new methods when standard software or closed-form mathematical solutions for power calculations are not available.
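As a minimal sketch of the simulation approach, the Python below estimates how often a test rejects by generating many hypothetical trials. It deliberately uses a simple large-sample two-sample z-test on normally distributed outcomes so the answer can be checked against the textbook result (about 64 subjects per arm for roughly 80% power to detect a 0.5 standard deviation difference at two-sided alpha 0.05). The function names, the normal outcome model, and all parameter values are illustrative assumptions; in practice the test function would be replaced by the novel method under study.

```python
import random
from statistics import NormalDist


def _mean_var(values):
    """Sample mean and sample variance (n - 1 divisor) with plain float arithmetic."""
    n = len(values)
    m = sum(values) / n
    return m, sum((v - m) ** 2 for v in values) / (n - 1)


def z_test_pvalue(x, y):
    """Two-sided p value for a difference in means (large-sample z approximation)."""
    mx, vx = _mean_var(x)
    my, vy = _mean_var(y)
    z = (mx - my) / (vx / len(x) + vy / len(y)) ** 0.5
    return 2 * NormalDist().cdf(-abs(z))


def simulated_rejection_rate(test, n_per_arm, effect, sd=1.0, alpha=0.05,
                             n_sims=2000, seed=2024):
    """Share of simulated trials in which `test` rejects at level alpha.

    effect=0 estimates the type I error rate; effect>0 estimates power.
    Outcomes are drawn from normal distributions (an illustrative assumption).
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sims):
        treated = [rng.gauss(effect, sd) for _ in range(n_per_arm)]
        control = [rng.gauss(0.0, sd) for _ in range(n_per_arm)]
        if test(treated, control) < alpha:
            rejections += 1
    return rejections / n_sims


type_i_error = simulated_rejection_rate(z_test_pvalue, n_per_arm=64, effect=0.0)
power = simulated_rejection_rate(z_test_pvalue, n_per_arm=64, effect=0.5)
print(f"type I error ~ {type_i_error:.3f}, power ~ {power:.3f}")
```

Because each scenario is just a loop, the same harness can wrap a method that has no closed-form power formula. With 2,000 simulated trials, the Monte Carlo standard error for a power near 0.8 is roughly 0.01.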

— How do the operating characteristics (the power and type I error/false positive rate) of the novel method compare with traditional methods?

A comparison of the relative efficiency of methods can be helpful. Is any gain in power from a new method worth its tradeoffs, given other potential issues?

— Is there an urgent public health need that informs our assessment of the operating characteristics?

Occasionally, an urgent need may warrant less stringent assessments of statistical methods. If the amount of data needed to support a definitive conclusion cannot be collected in a reasonable period, and the potential benefit/risk tradeoff of early versus later decision making is acceptable, more flexibility in statistical operating characteristics may be required. For example, a new method may be harder to apply and interpret but offer a considerable gain in power. When there is a strong need to get a therapy into physicians’ hands, such tradeoffs may be appropriate.

Surrogate Endpoints

In cardiovascular trials, clinical outcomes of interest, such as mortality or major morbidity, may be rare in a particular target population. Rare events often require a large sample size to detect treatment effects of interest. If we study a treatment and see few events, is this due to the fundamental rarity of the event (and the role of chance) or the effectiveness of treatment? Use of a control group, in particular a randomized control, partially addresses this, as the difference between treatment and control is often interpreted as being due to the effectiveness of the treatment. However, even with a randomized control, distinguishing the role of chance from the real magnitude of a treatment effect requires studies of substantial size. This can easily produce a trial that is not logistically feasible. Feasibility can be framed in terms of numbers of subjects, time to obtain an answer, the definitiveness required for a particular answer, and financial requirements. A high bar for one of these may render an otherwise scientifically strong trial practically implausible. This concern can be mitigated if the therapy studied produces a large treatment effect, but large treatment effects are relatively rare in modern translational research, and smaller effects may still be of clinical interest. Additionally, important clinical endpoints may not even be measurable at crucial preclinical stages. Earlier-stage studies can inform clinical studies, but often only indirectly.
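To make the sample-size point concrete, the sketch below applies the common normal-approximation formula for comparing two proportions. The event rates are hypothetical: the same 50% relative reduction is evaluated for a rare event (2% versus 1%) and for a common one (20% versus 10%). The function name is illustrative, and the formula assumes equal allocation and no continuity correction.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_arm_two_proportions(p_control, p_treated, alpha=0.05, power=0.80):
    """Per-arm sample size for a two-sided test of two proportions
    (normal approximation, equal allocation, no continuity correction)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_treated) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p_control * (1 - p_control)
                                 + p_treated * (1 - p_treated))) ** 2
    return ceil(numerator / (p_control - p_treated) ** 2)


rare = n_per_arm_two_proportions(0.02, 0.01)    # rare event, 50% relative reduction
common = n_per_arm_two_proportions(0.20, 0.10)  # common event, same relative reduction
print(rare, common)
```

Under these assumptions, the rare-event scenario needs roughly 2,300 subjects per arm, compared with about 200 per arm for the common event, even though the relative treatment effect is identical.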

Surrogate outcomes are one solution to these problems. Surrogates shift the focus from the underlying clinical outcome to an outcome that is easier to measure or requires a more realistic sample size. The shift can be made either to a more easily measured outcome (such as a biomarker) or to the same outcome at an earlier time point (e.g., 1 month instead of 12 months, although the later time point may be of primary clinical interest since an early effect may be transient). Broadly, any measure used as a substitute for the clinical outcome of interest is a surrogate outcome. To the extent that a candidate surrogate outcome “captures” all the information regarding the treatment effect on the outcome of interest, it would make a good surrogate. This idea was formalized as criteria for valid surrogate endpoints in clinical trials (1). Informally, correlation of a surrogate endpoint with a clinical outcome is often thought to establish validity of a surrogate. However, the formal criteria are substantially more rigorous, and in fact, even for a surrogate that satisfies the criteria, a significant treatment effect on a surrogate endpoint does not necessarily imply a treatment effect on the clinical outcome of interest (2). In practice, a number of surrogate endpoints in the cardiovascular space have demonstrated value despite their limitations. These include blood pressure and various lipid measurements, in the appropriate patient populations.

With new technologies, there is an obvious appeal to using established endpoints, especially established surrogate endpoints. This provides multiple benefits: previous arguments about the appropriateness of the definition may be relevant; operational aspects may be well understood and applied; and comparisons to previous studies may be leveraged. However, new technologies often have novel aspects that motivated their development. These novel aspects may not be readily addressed by standard endpoints; worse, standard endpoints may obscure new benefits. A new endpoint may be viewed with suspicion and cast as a surrogate for more traditional endpoints, but this may simply reflect a lack of familiarity with the new benefits and new endpoints.

Substantial work may be required to gain acceptance for such new measures, including establishing various types of validity. Pilot testing novel endpoints in first-in-human (FIH) or early feasibility studies (EFS) may be possible. This can help detect serious issues with the definition or data collection process. For example, a new surrogate endpoint based on blood collection may be proposed. If a feasibility study reveals a problem with sample collection, mitigation efforts may be explored and then fully implemented in a subsequent trial. Without such pilot testing, the value of the new surrogate in a pivotal trial may be severely limited due to missing data. Novel endpoints may require substantial additional effort outside the typical pivotal clinical trial used for regulatory approval or reimbursement purposes.

Composite Endpoints

Another solution to the problem of limited power for rare events or small treatment effects is the use of a composite endpoint. A composite endpoint is a single endpoint defined to capture multiple outcomes. A canonical example is the use of “major adverse cardiovascular events” (MACE). Multiple therapeutic areas and products have used an endpoint along these lines. Of note, the particular events that constitute the components of MACE endpoints often vary, so readers of clinical studies should closely examine the definition of the acronym in any given trial. The particular choice of components should be based on a consideration of the disease, the study population, and the treatment under study. The components should be clinically relevant and expected to be modified by the treatment.

Clinical relevance is a matter of degree. In an ideal situation, there would be universal agreement that all components of a composite are of equal importance so that no matter the distribution of events among subcomponents, the overall composite endpoint provides a simple summary measure to compare the relative performance of the therapy. Since this is rarely possible, obtaining consensus that the components of a composite are relatively comparable will help ensure acceptability of a composite. A solid clinical rationale for the components of a composite endpoint is necessary. Further, inclusion of components that do not reflect the treatment effect can dilute the results by adding noise. Conversely, a composite endpoint that only includes components to increase statistical power and excludes important clinical events can exacerbate problems of interpretation.

While a composite endpoint may provide increased statistical power, interpretation can be challenging. For example, if the overall composite endpoint shows a statistical difference favoring the treatment group, but certain clinically important components of the composite (e.g., death) numerically favor the control group, the value of the composite may be questionable. The role of chance that is at play for a single endpoint can be even more complicated for a composite, as each component is also subject to the role of chance and trials are often not designed to provide a high degree of statistical power for any individual component. Thus, challenges will be greatest for the least frequently occurring components. In a modestly sized trial, a small treatment effect may produce discrepant results amongst the components of a composite endpoint. This happens precisely when composite endpoints may be of most interest: the case of small treatment effects that require large studies.

For a new technology, there are special challenges in applying a composite previously studied for other technologies. The new treatment may differentially affect the individual components, or it may affect other clinical outcomes of interest that have not traditionally been incorporated into the composite endpoint. These issues will vary with the similarities and differences between the technologies. The tension between clinical relevance and interpretability, on the one hand, and statistical validity and power, on the other, may be considerable and not easily resolved. A thorough evaluation and discussion by all relevant parties (clinicians, regulators, statisticians, and others) is required.

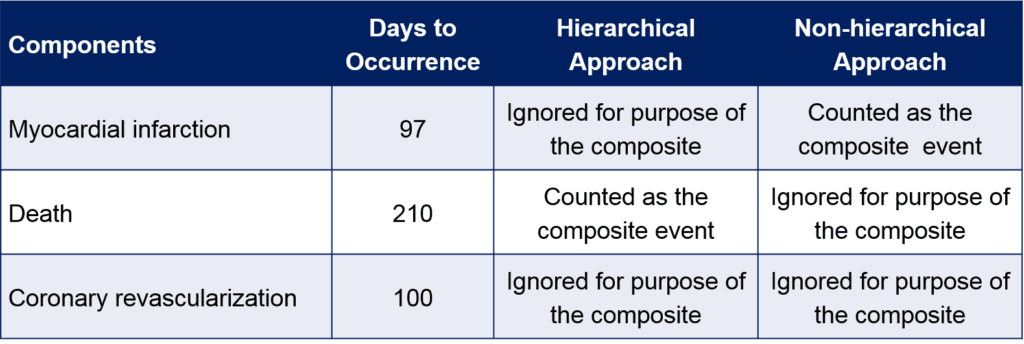

There are several approaches for handling a composite endpoint that are not always clearly stated or understood. One involves counting the first occurrence of any of the components of the composite. This is frequently the case in a time-to-event analysis, where the analysis is based on both the occurrence and the timing of an event, so counting the first occurring component is a natural consequence. When the composite is decomposed this way, the numbers of first events attributed to each component sum to the total number of composite events (i.e., each subject with a composite event contributes exactly one component, the one that occurred first). An alternative approach, sometimes termed a “hierarchical composite,” is based on counting the most severe component of the composite when decomposing the composite (Table 1). For example, for a MACE endpoint that includes the components of myocardial infarction and death, a patient who experienced both events would be counted as a death in a hierarchical approach. Such an approach may be preferred when the primary analysis is based on the occurrence of events at a fixed point in time (e.g., 12 months) as opposed to a time-to-event approach.

Table 1. Illustration of Approaches for Summarizing Composite Endpoints – Hypothetical Patient Data

In either case, assessments of the individual components, as well as of potential multiple occurrences of the same component in a subject, are important, as they can alter the interpretation of the study. To avoid oversimplification and possible misinterpretation, any study that employs a composite endpoint should include analyses of the individual components while recognizing the potential limitations of such analyses (e.g., decreased power for components, type I error inflation due to multiple testing, competing risks). This is true regardless of the statistical methods used for analysis.
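The two counting conventions described above can be sketched in Python. The patient data, study days, and severity ranking (death, then myocardial infarction, then revascularization) are hypothetical. Both conventions attribute each patient with a composite event to exactly one component, so the totals agree, while the component breakdowns differ.

```python
from collections import Counter

# Hypothetical severity ranking for illustration, most severe first.
SEVERITY = ["death", "MI", "revascularization"]


def first_event_counts(patients):
    """Attribute each patient's composite event to the component that occurred first.

    Ties on the same day go to whichever event is listed first.
    """
    counts = Counter()
    for events in patients.values():
        if events:
            component, _day = min(events, key=lambda event: event[1])
            counts[component] += 1
    return counts


def hierarchical_counts(patients, severity=SEVERITY):
    """Attribute each patient's composite event to the most severe component experienced."""
    rank = {component: i for i, component in enumerate(severity)}
    counts = Counter()
    for events in patients.values():
        if events:
            worst = min((component for component, _day in events), key=rank.__getitem__)
            counts[worst] += 1
    return counts


# Hypothetical patients: lists of (component, study day)
patients = {
    "P1": [("MI", 30), ("death", 200)],
    "P2": [("revascularization", 90)],
    "P3": [],
    "P4": [("revascularization", 10), ("MI", 45)],
}
first = first_event_counts(patients)
hier = hierarchical_counts(patients)
print(first)  # P1 counted as MI, P2 and P4 as revascularization
print(hier)   # P1 counted as death, P4 as MI, P2 as revascularization
```

Patient P1, who had a myocardial infarction and later died, illustrates the difference: the first-event convention counts a myocardial infarction, the hierarchical convention counts a death.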

Advanced Statistical Methods for Composite Endpoints

To overcome some of the difficulties with composite endpoints, there are several sophisticated statistical approaches to analysis. Generally, these methods attempt to weight or rank the components to enable a global assessment that accounts for varying degrees of importance. These methods are amenable to cases where there is a clear ranking among the components.

Work on analyzing multiple separate endpoints helped trigger more sophisticated approaches to composite endpoints (3). Early work applied nonparametric rank approaches to multiple endpoints in an attempt to address many of the problems associated with composite endpoints. A later paper by Finkelstein and Schoenfeld took the approach of explicitly forming pairwise comparisons of trial subjects and assessing each outcome in a ranked fashion (4). Notably, that paper also included an example of a recurrent event (e.g., infection), addressing another challenge of composite endpoints: multiple occurrences of an event within a subject. To illustrate the method, take two subjects in a study, one receiving the experimental treatment and the other a control. If the treatment subject dies before the control, the treatment “loses.” If neither subject dies during the study, they “tie” on the outcome of death and are then compared on an additional, less important outcome. Thus, for each pair of subjects, one can determine the “win” or “lose” status of the experimental treatment by systematically comparing their outcomes in order of ranked importance. This is analogous to the children’s card game of “War”: when a hand is tied (say, both players lay down a queen), subsequent cards are played to determine the winner of the hand. The process assumes that the occurrence of the highest-ranked outcome trumps all other outcomes.
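A minimal sketch of the ranked comparison for a single treatment-control pair follows. For simplicity it assumes both subjects share the same fixed follow-up, whereas the published method also accounts for differing follow-up; the two outcome levels (death, then number of hospitalizations) and all names are hypothetical.

```python
from typing import NamedTuple, Optional


class Subject(NamedTuple):
    death_day: Optional[int]  # day of death, or None if alive at end of follow-up
    hospitalizations: int     # number of hospitalizations during follow-up


def compare_pair(treated: Subject, control: Subject) -> int:
    """Return +1 if the treated subject "wins", -1 if it "loses", 0 if tied.

    Outcomes are examined in order of importance; a lower-ranked outcome is
    consulted only when the pair is tied on every higher-ranked outcome.
    Assumes both subjects share the same fixed follow-up period.
    """
    # Level 1: death. Surviving, or dying later, is better.
    t_day, c_day = treated.death_day, control.death_day
    if t_day != c_day:
        if t_day is None:
            return 1
        if c_day is None:
            return -1
        return 1 if t_day > c_day else -1
    # Level 2 (tied on death): fewer hospitalizations is better.
    if treated.hospitalizations != control.hospitalizations:
        return 1 if treated.hospitalizations < control.hospitalizations else -1
    return 0


# A survivor with three hospitalizations beats a patient who died on day 100.
print(compare_pair(Subject(None, 3), Subject(100, 0)))  # 1
```

The printed case previews a concern raised below: under a strict ranking, survival with substantial morbidity always beats death, regardless of what else happened.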

This simplifies the analysis and partially addresses past concerns with composite endpoints, but several issues remain. First, one must have a definitive ordering of clinical importance. Such an ordering is not always possible, and any particular ranking may be controversial. As an example, imagine a composite of death, stroke, hospitalization, and revascularization, ranked in the order listed from most to least important. A subject who survives the study but has multiple debilitating strokes, prolonged hospitalization, and revascularization will be considered a “winner” relative to a subject who dies but remains free from stroke, hospitalization, and revascularization. A comparison of the quality of life or health economics of two such patients may not be as clear. Further, in some cases a hospitalization may be more or less of a concern than a stroke, depending on the duration and reason for hospitalization and on the severity of the stroke. Such issues put the relative ranking of these components in question. This may be partially addressed by defining more nuanced components (e.g., disabling stroke based on a modified Rankin score), but doing so may reduce power by lowering the incidence rate. Clearly, no one method can anticipate or address every possible scenario or concern.

The idea of forming pairwise comparisons for multiple endpoints was extended to include a measure of effect size, the “win ratio” (5). Take a “winner” to be a matched pair in which the experimental treatment subject has the better outcome and a “loser” to be a pair in which the experimental treatment subject has the worse outcome. The win ratio is the total number of winners divided by the total number of losers, giving a simple expression of the relative performance of the treatment on the composite endpoint that accounts for the components’ relative clinical importance. This paper also used risk matching to improve performance in cases where strong prognostic factors could be leveraged for more sophisticated matching. Risk matching here is akin to covariate adjustment in standard linear models: as with linear models, when there is a strong argument for adjustment, it can increase power. When adjustment is controversial or not possible, unadjusted/unmatched analyses are still appropriate.
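The win ratio can be sketched by comparing every treatment subject with every control subject, which is an unmatched analysis of the kind the text notes remains appropriate when matching is not possible. Each subject is summarized by a hypothetical tuple of outcomes listed in order of clinical priority and scored so that larger is better: days alive during a common 365-day follow-up, then the negative count of hospitalizations. The data and function names are illustrative assumptions.

```python
from itertools import product


def compare(treated, control):
    """+1 if the treated subject wins, -1 if it loses, 0 if tied on every level.

    Outcome tuples are ordered by clinical priority; larger values are better.
    """
    for t_val, c_val in zip(treated, control):
        if t_val != c_val:
            return 1 if t_val > c_val else -1
    return 0


def win_ratio(treated_group, control_group):
    """Unmatched win ratio over all treatment x control pairs.

    Returns (ratio, wins, losses, ties); the ratio is infinite if there are no losses.
    """
    wins = losses = ties = 0
    for t, c in product(treated_group, control_group):
        result = compare(t, c)
        if result > 0:
            wins += 1
        elif result < 0:
            losses += 1
        else:
            ties += 1
    ratio = wins / losses if losses else float("inf")
    return ratio, wins, losses, ties


# (days alive out of 365, -number of hospitalizations); hypothetical data
treated = [(365, 0), (365, -2), (365, -1)]
control = [(365, -1), (150, 0), (365, 0)]
ratio, wins, losses, ties = win_ratio(treated, control)
print(ratio, wins, losses, ties)
```

Here 4 wins against 3 losses (with 2 ties) gives a win ratio of about 1.33, favoring treatment. This sketch omits confidence intervals and the risk matching described in the text.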

Multiple Testing

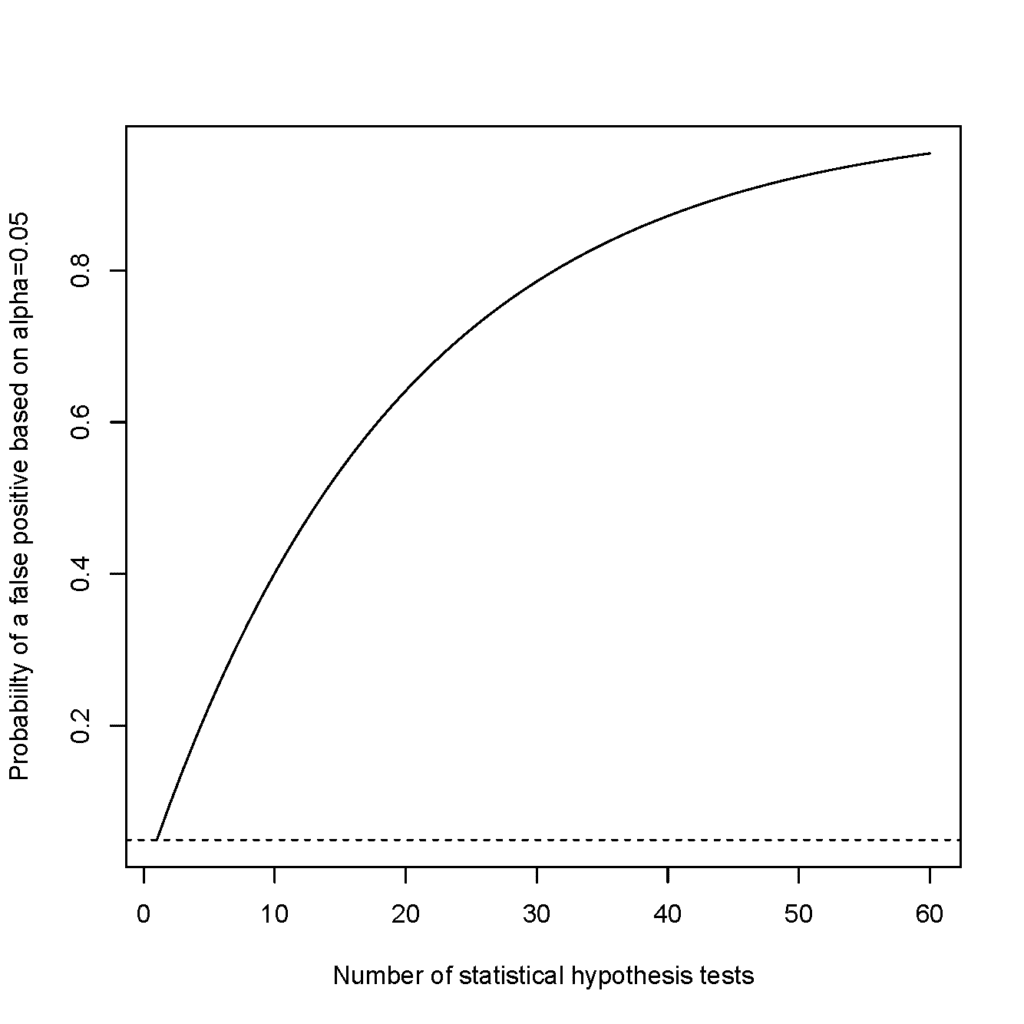

The primary objective of a study drives the overall trial design. Often, a primary endpoint (or coprimary endpoints) directly assesses the primary objective. Additional endpoints may be used to support the primary endpoint, to address secondary questions of interest, or to generate novel data for hypothesis generation and future study. If each endpoint in a study is assessed with a statistical hypothesis test at a fixed alpha level, the overall chance of at least one false-positive finding in the study increases with the number of tests. If the tests are independent, the probability of at least one false positive approaches 1 as the number of tests grows (Figure 1). If each test is performed at a 0.05 alpha level, the false-positive rate for a study with 10 independent tests already exceeds 40%. Clearly, a study with multiple endpoints requires careful thought and planning on how to handle multiplicity.

Figure 1. Probability of a False Positive

If each endpoint in a study is assessed with a statistical hypothesis test at a fixed alpha level, the overall chance of at least one false-positive finding increases. If the tests are independent, the probability of at least one false positive approaches 1 as the number of tests grows. (Figure courtesy of Chris Mullin.)
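Under the independence assumption described in the text, with k tests each at level alpha and all null hypotheses true, the probability of at least one false positive is 1 - (1 - alpha)^k. A short Python check reproduces the 40% value quoted for 10 tests at alpha 0.05; the function name is illustrative.

```python
def prob_at_least_one_false_positive(n_tests, alpha=0.05):
    """Family-wise false-positive probability for independent tests under the null."""
    return 1 - (1 - alpha) ** n_tests


for k in (1, 5, 10, 20, 50):
    print(k, round(prob_at_least_one_false_positive(k), 3))
# 10 tests -> 0.401; 50 tests -> 0.923
```

Positively correlated endpoints, which are common in practice, give a somewhat lower family-wise rate than this independence calculation.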

Methods for controlling type I error need to be defined in advance. Post hoc adjustments are challenging as knowledge of the results may bias choices, including the number of tests to be controlled, as well as the particular method for control.

The simplest correction, the Bonferroni method, divides the alpha level by the number of planned tests. This method is very conservative, and modern alternatives have been developed to provide more power. These include step-down and step-up methods that adjust p values based on their ranking. Another practical approach is a “gatekeeping” strategy, whereby endpoints are ordered in advance and tested in sequence, stopping at the first nonsignificant result. This simple method requires no sophisticated calculation but relies on a good ordering of the endpoints. An endpoint with low power that is placed too early in the hierarchy would serve as a “gatekeeper” and prevent subsequent endpoints from being deemed significant. Strategies for controlling type I error in multiple testing scenarios should consider the tradeoffs among statistical power, hypotheses of varying clinical or scientific interest, and the desire for formal statistical conclusions.
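The strategies named above can be sketched in Python. Bonferroni and the fixed-sequence (gatekeeping) rule follow the text directly; for the step-down family, the sketch uses Holm's procedure, one widely used example that the text does not name specifically. The p values are hypothetical and listed in a prespecified testing order.

```python
def bonferroni(pvalues, alpha=0.05):
    """Reject H_i if p_i <= alpha / m."""
    m = len(pvalues)
    return [p <= alpha / m for p in pvalues]


def holm(pvalues, alpha=0.05):
    """Holm step-down: compare the smallest p value with alpha/m, the next with
    alpha/(m-1), and so on, stopping at the first non-rejection."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    reject = [False] * m
    for step, i in enumerate(order):
        if pvalues[i] > alpha / (m - step):
            break
        reject[i] = True
    return reject


def fixed_sequence(pvalues, alpha=0.05):
    """Gatekeeping in the given order: test each at full alpha and stop at the
    first nonsignificant result; everything after it is not rejected."""
    reject = [False] * len(pvalues)
    for i, p in enumerate(pvalues):
        if p > alpha:
            break
        reject[i] = True
    return reject


p = [0.010, 0.015, 0.020, 0.30]       # hypothetical, in prespecified order
print(bonferroni(p))                   # [True, False, False, False]
print(holm(p))                         # [True, True, True, False]
print(fixed_sequence(p))               # [True, True, True, False]
print(fixed_sequence([0.30] + p[:3]))  # low-power endpoint first: all False
```

The last line shows the gatekeeper problem described in the text: placing a nonsignificant endpoint first blocks every later endpoint, even ones with small p values.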

There is no purely statistical reason for always selecting an alpha level of 0.05 in a statistical hypothesis test. This value was long ago deemed sufficiently conservative in terms of controlling the type I error rate and is retained in part because of tradition. However, when one considers the range of disease states, technologies, and areas of scientific interest where a statistical threshold may be applied, a more nuanced view is appropriate. For example, in particle physics, evidence of the Higgs boson was based on an extraordinary p value of 0.0000003 (6). In an area of scientific discovery where experimental processes are easily repeatable, and the theory and methods are extremely well developed, such a tight threshold may be appropriate. In other areas, where there is a strong public health need but data are hard to come by, a less stringent requirement may be appropriate (7). For endpoints that are exploratory in nature, it may be appropriate to use a nominal or unadjusted alpha level, since firm conclusions will not be drawn on the basis of a single study; such findings would instead be candidates for future study and confirmation.
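To relate these thresholds to the normal distribution, the short sketch below converts between standard-normal deviations (“sigma”) and tail probabilities. The p value cited for the Higgs boson corresponds to roughly five sigma (one-sided), the convention commonly used in particle physics, whereas a two-sided 0.05 level corresponds to about 1.96 sigma. The helper names are illustrative.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def one_sided_p_from_sigma(k):
    """Upper-tail probability beyond k standard deviations."""
    return STANDARD_NORMAL.cdf(-k)


def sigma_from_two_sided_alpha(alpha):
    """Critical value for a two-sided test at level alpha."""
    return STANDARD_NORMAL.inv_cdf(1 - alpha / 2)


print(f"{one_sided_p_from_sigma(5):.2e}")          # about 2.87e-07
print(round(sigma_from_two_sided_alpha(0.05), 2))  # 1.96
```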

Missing Data

The landmark report by the National Academies on the prevention and treatment of missing data in clinical trials brought the importance of missing data to the fore (8). Missing data have long been recognized as a problem in clinical trials, but the focus was often on the loss of power. Increasing the sample size of a study to account for the expected degree of missing data mitigates this power loss. However, the National Academies report emphasized that a more fundamental concern regarding missing data is bias. Briefly, if missingness in a trial is related to the likelihood of a good or bad outcome, the problem is more than just a loss of power. In a trial where subjects who are not doing well are more likely to drop out or become lost to follow-up, an analysis restricted to subjects with complete data will be overly optimistic. Conversely, in a trial in which subjects treated effectively in the short term may drop out because of burdensome clinical trial assessments, those remaining may be only those not doing well, giving a pessimistic view of the treatment. Either case can produce an estimate of the treatment effect that differs from the one that would have been obtained had the subjects who dropped out continued to the trial’s end. Complete data are required for a true intent-to-treat approach for clinical trials.

The best solution to the problem of missing data, as for many problems in public health and medicine, is prevention, and steps taken to minimize the amount of missing data in a trial are essential. A variety of statistical methods exist for handling missing data, and research on improved methods continues, but all methods ultimately rely on assumptions that are often untestable and unverifiable. Accordingly, the best approach is often multipronged: taking steps to minimize missing data; prespecifying a primary method for handling missing data; and prespecifying a variety of sensitivity analyses to assess the impact of missing data.

There are three analytical approaches to handling missing data: analyzing only complete data, discarding incomplete cases; analyzing available data, including incomplete cases; and imputing values for missing data. The first approach is usually inappropriate due to reliance on strong assumptions about missing data and the potential for bias when assumptions are not met. Analyzing available data is possible with many common software packages and is valid under less restrictive assumptions. Imputation involves introducing values for missing data – precisely how this is done has a strong impact on the validity of results.

It is useful to classify missing data as “missing completely at random” (MCAR), “missing at random” (MAR), and “missing not at random” (MNAR). This classification helps guide the choice of primary analyses and sensitivity analyses. MCAR data are those that are truly missing at random; that is, the fact that data are missing is completely unrelated to any variable in a study and is therefore unpredictable. MCAR data rarely occur in practice. As a plausible example, if a random subset of study participants neglected to complete one page of a baseline case report form, such data would be MCAR, because the fact that the data are missing is unpredictable (i.e., it cannot be determined who has missing data) and unrelated to the participants’ outcomes (neglecting the page is not related to their outcome under the treatment).

MAR data are more common and are easily accommodated by many statistical methods. Data are MAR if missingness can be fully accounted for by other variables for which there is complete information. Continuing with the case report form example, if only male subjects neglected to complete the page of the case report form, it could be predicted who had missing data, but, given sex, the fact that data were missing would still be unrelated to the subjects’ outcomes.

Finally, data are MNAR when missingness is related to the values that are missing, such as the outcome itself. Such a case could occur if a subject did not complete the page of a case report form at a follow-up visit because they were too sick. In this case, something more sophisticated must be done in the analysis to account for the potential association of missingness with outcome. Determining whether data are MCAR, MAR, or MNAR is usually much more challenging in practice, and in some cases impossible. Most trials employ a primary analysis method that assumes MAR and provide for sensitivity analyses that assume MNAR.
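The three mechanisms can be illustrated with a small simulation. The data-generating model, missingness probabilities, and helper names are hypothetical assumptions. Outcomes are constructed to differ by sex, so in the MAR case missingness depends on an observed variable that is itself related to the outcome. Under MCAR the complete-case mean is unbiased. Under MAR it is biased, but averaging the observed outcomes within levels of the fully observed variable removes the bias. Under MNAR, where poorer outcomes go missing more often, both estimates are too optimistic.

```python
import random
from statistics import fmean


def simulate(mechanism, n=20000, seed=7):
    """Hypothetical trial data: (is_male, outcome, observed) per subject.

    Outcomes are higher (better) on average for males. Missingness depends on
    nothing (MCAR), on fully observed sex (MAR), or on the outcome itself (MNAR).
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        male = rng.random() < 0.5
        y = rng.gauss(1.0 if male else 0.0, 1.0)
        if mechanism == "MCAR":
            p_missing = 0.3
        elif mechanism == "MAR":
            p_missing = 0.5 if male else 0.1
        elif mechanism == "MNAR":
            p_missing = 0.5 if y < 0.5 else 0.1  # poorer outcomes go missing more often
        else:
            raise ValueError(f"unknown mechanism: {mechanism}")
        rows.append((male, y, rng.random() >= p_missing))
    return rows


def estimates(rows):
    """Return (full-data mean, complete-case mean, sex-adjusted mean)."""
    full = fmean(y for _, y, _ in rows)
    complete_case = fmean(y for _, y, observed in rows if observed)
    # Average observed outcomes within each sex, weighted by each sex's share of
    # all subjects (sex is never missing). Valid under MCAR or MAR given sex.
    adjusted = 0.0
    for sex in (True, False):
        group = [(y, observed) for male, y, observed in rows if male == sex]
        share = len(group) / len(rows)
        adjusted += share * fmean(y for y, observed in group if observed)
    return full, complete_case, adjusted


for mechanism in ("MCAR", "MAR", "MNAR"):
    full, cc, adj = estimates(simulate(mechanism))
    print(f"{mechanism}: full {full:.3f}  complete-case {cc:.3f}  sex-adjusted {adj:.3f}")
```

In real data the full-data mean is unknown, which is exactly why MNAR cannot be detected from the observed data alone and why sensitivity analyses are needed.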

A variety of methods for handling missing data are used in pivotal clinical trials to support U.S. Food and Drug Administration (FDA) marketing applications. Historically, many trials used “last observation carried forward” (LOCF) imputation: for a subject missing the primary endpoint evaluation, the last observed data point was used in its place. This approach tends to underestimate variability, since it is often extremely unlikely that a subject would have the same measurement at two different time points, and it ignores any time-varying treatment effect. Continued use of LOCF imputation is presumably motivated by comparisons with past trials. More common modern (and more statistically appropriate) approaches include likelihood-based methods, multiple imputation, and sensitivity analyses.
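LOCF is mechanically simple, which partly explains its historical popularity. The sketch below is a hypothetical helper that operates on one subject’s visit-by-visit measurements, with None marking a missed visit. The imputed values are then treated as if they had been observed, which is the source of the variance underestimation described above.

```python
from typing import List, Optional


def locf(visits: List[Optional[float]]) -> List[Optional[float]]:
    """Fill each missed visit (None) with the most recent observed value.

    Visits before the first observation stay None; nothing is carried backward.
    """
    filled: List[Optional[float]] = []
    last: Optional[float] = None
    for value in visits:
        if value is not None:
            last = value
        filled.append(last)
    return filled


# Hypothetical subject seen at baseline and month 3, then lost before months 6 and 12
print(locf([4.0, 5.5, None, None]))  # [4.0, 5.5, 5.5, 5.5]
```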

Likelihood-based methods underlie many common statistical tools. These include linear regression, logistic regression, and proportional hazards models. Likelihood-based methods posit a parametric model for the distribution of the data and inference is based on the likelihood function given the data. Usually valid under an MAR assumption, these methods hold advantages that include their familiarity and generally well understood statistical properties.

Multiple imputation attempts to solve the problem of variability underestimation by producing a range of plausible values for imputation and appropriately averaging over those values to produce valid estimates and statistical summaries. Essentially, one creates a model to impute missing data based on the available data. Subjects missing an outcome variable can be compared to subjects with similar characteristics who are not missing the outcome variable. This comparison facilitates simulating multiple data sets with imputed outcomes to reflect the appropriate degree of variability in the outcome variable. The multiple data sets are then combined to produce appropriate estimates, confidence intervals, and p values.
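After each imputed data set is analyzed separately, the results are typically combined with the pooling formulas commonly known as Rubin’s rules. The sketch below implements them for a single parameter. The input numbers are hypothetical, and the degrees-of-freedom adjustment used for confidence intervals is omitted for brevity.

```python
from math import sqrt
from statistics import fmean, variance


def pool_rubin(estimates, squared_ses):
    """Combine m completed-data analyses of one parameter (Rubin's rules).

    estimates:   point estimate from each imputed data set
    squared_ses: squared standard error (variance) from each imputed data set
    Returns the pooled estimate and its total standard error.
    """
    m = len(estimates)
    if m < 2 or len(squared_ses) != m:
        raise ValueError("need at least two imputations with matching variances")
    q_bar = fmean(estimates)                # pooled point estimate
    within = fmean(squared_ses)             # average within-imputation variance
    between = variance(estimates)           # between-imputation variance (m - 1 divisor)
    total = within + (1 + 1 / m) * between  # total variance
    return q_bar, sqrt(total)


est, se = pool_rubin([1.2, 1.0, 1.4, 1.1, 1.3], [0.04] * 5)
print(round(est, 3), round(se, 3))  # 1.2 0.265
```

Compared with the standard error of 0.2 from any single imputed data set, the pooled standard error of about 0.265 reflects the additional uncertainty due to imputation.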

Two other families of methods for MNAR data are selection models and pattern-mixture models. These and other methods, such as inverse probability weighting, have not been commonly used in medical device trials because of a combination of few good precedents, the need for strong assumptions, complexity, and a lack of standard software to aid implementation. However, these methods are likely to play a larger role in future trials as ambitions for handling missing data grow, software improves, and precedents arise. Briefly, selection and pattern-mixture models factor the joint distribution of the data and the missingness indicators in different ways, and each requires assumptions about the missing data mechanism to support imputation. Unfortunately, many of these assumptions are unwieldy and, again, untestable and unverifiable.
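In standard notation (an assumption, since the text does not fix symbols), let Y be the complete outcome data, split into observed and missing parts Y_obs and Y_mis; let R be the missingness indicators; and let X be fully observed covariates. The two families factor the same joint distribution in opposite directions:

```latex
\begin{aligned}
\text{Selection model:} \quad & f(Y, R \mid X) = f(Y \mid X)\, f(R \mid Y, X) \\
\text{Pattern-mixture model:} \quad & f(Y, R \mid X) = f(Y \mid R, X)\, f(R \mid X)
\end{aligned}
```

In the selection factorization, data are MNAR when f(R | Y, X) depends on Y_mis. In the pattern-mixture factorization, the distribution of Y_mis within each missingness pattern cannot be estimated from the observed data and must be supplied by assumption; this is where the untestable assumptions enter.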

A more practically motivated and more commonly used approach to sensitivity analyses for missing data is a tipping-point analysis. This analysis assesses all possible combinations of outcomes for missing data among the treatment and control subjects. The “tipping point” is defined as the combination of imputed values that “tips” the results from significant to nonsignificant (or perhaps, estimates from favorable to non-favorable). More broadly, this method provides a range of estimates and p values, from highly conservative (e.g., all missing outcomes for treatment subjects are negative and all missing outcomes for control subjects are positive) to highly anti-conservative assumptions regarding missing data, allowing an assessment of sensitivity to different patterns of missing data (note that the extremes represent MNAR scenarios). This approach is somewhat ad hoc, as it does not distinguish between more and less likely scenarios. Additional information or analyses, in particular reasons for missing data or a comparison of baseline risk factors for those with and without missing data, may provide insight into the likelihood that data are MNAR. Other approaches based on statistical modeling, like multiple imputation, provide more focused results and attempt to model likely scenarios. The caveat is that such models again rest on untestable assumptions; they are more likely to be accepted when the mechanism of missingness is considered understood and amenable to modeling.
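For a binary outcome, the tipping-point grid can be sketched as follows. Every combination of imputed successes among the missing treatment and control subjects is evaluated with a pooled two-proportion z-test. The choice of test, the counts, and the function names are hypothetical assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_prop_p(s1, n1, s2, n2):
    """Two-sided p value from the pooled two-proportion z-test."""
    pooled = (s1 + s2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0
    z = (s1 / n1 - s2 / n2) / se
    return 2 * NormalDist().cdf(-abs(z))


def tipping_point_grid(s_t, obs_t, miss_t, s_c, obs_c, miss_c):
    """p value for every way of imputing successes among the missing subjects.

    Key (i, j): i of the miss_t missing treatment subjects and j of the miss_c
    missing control subjects are imputed as successes.
    """
    n_t, n_c = obs_t + miss_t, obs_c + miss_c
    return {
        (i, j): two_prop_p(s_t + i, n_t, s_c + j, n_c)
        for i in range(miss_t + 1)
        for j in range(miss_c + 1)
    }


# Hypothetical trial: 80/100 observed successes on treatment, 65/100 on control,
# with 10 subjects missing the outcome in each arm.
grid = tipping_point_grid(s_t=80, obs_t=100, miss_t=10, s_c=65, obs_c=100, miss_c=10)
tipped = sorted(k for k, p in grid.items() if p >= 0.05)
print(len(grid), len(tipped))
print(f"best case for treatment (10, 0): p = {grid[(10, 0)]:.4f}")
print(f"worst case for treatment (0, 10): p = {grid[(0, 10)]:.4f}")
```

Cells with p >= 0.05 mark where the conclusion tips. As the text cautions, the grid weights all scenarios equally and says nothing about which of them are plausible.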

Historical Controls

While randomized controlled trials are considered the “gold standard” for clinical research, other methods may be required in certain situations, and with appropriate controls, these may still provide a high degree of scientific evidence (9). Two common methods for comparing prospective data against historical data are the formulation of performance goals and propensity score matching. The former derives a “line in the sand” against which a new therapy is tested. The latter attempts to control for potential confounders in order to “clean” the comparison of historical and prospective data of relevant biases.

Objective Performance Criteria and Performance Goals

A canonical example of the use of historical controls is that of the objective performance criteria (OPCs) for mechanical cardiac valves. A large database of experience with mechanical valves was used to derive expected rates for particular events of interest. Results of prospective single-arm studies of a new valve are compared against the rates derived from these historical data. This approach was deemed appropriate in part because of the simplicity of the design and mechanism of action of mechanical valves. Additionally, when an effective therapy is available, clinicians may be reluctant to participate in a randomized trial because of a lack of equipoise (i.e., receiving a valve is almost surely better than not receiving a valve).

More common and more recent experience with historical controls centers on the use of performance goals. Mathematically and statistically, these are essentially the same as OPCs. However, a performance goal is used when the historical data are not deemed sufficient to be considered “objective,” which informally means that they are less well established in the literature than the historical data underlying an OPC. In the United States, regulators tend to avoid comparative language when discussing performance goals; rather than stating that the performance of a device is “significantly better” than, or even “noninferior” to, a performance goal, the preferred wording is that the performance goal is “met” or “not met.” Again, the underlying statistics are the same.
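Because the statistics behind OPCs and performance goals are the same, a single-arm comparison can be sketched once. The sketch below assumes a binary event endpoint and a rule, chosen here for illustration, under which the goal is “met” when the one-sided exact (Clopper-Pearson) upper 95% confidence bound on the event rate falls below the goal; actual criteria vary (valve OPCs, for instance, are commonly expressed as linearized rates per patient-year). The event counts and the 8% goal are hypothetical:

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k + 1))


def exact_upper_bound(events, n, alpha=0.05, tol=1e-10):
    """One-sided Clopper-Pearson upper (1 - alpha) confidence bound for a proportion."""
    if events == n:
        return 1.0
    # binom_cdf(events, n, p) decreases in p: it exceeds alpha at p = events / n
    # and is 0 at p = 1, so bisect for the p where it equals alpha.
    lo, hi = events / n, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if binom_cdf(events, n, mid) > alpha:
            lo = mid
        else:
            hi = mid
    return hi


def performance_goal_met(events, n, goal, alpha=0.05):
    """Illustrative rule: goal 'met' if the upper bound on the event rate is below it."""
    return exact_upper_bound(events, n, alpha) < goal


# Hypothetical single-arm study: 8 events in 200 subjects against an 8% goal.
ub = exact_upper_bound(8, 200)
print(f"upper 95% bound = {ub:.4f}; goal met: {performance_goal_met(8, 200, 0.08)}")
```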

An evaluation of the appropriateness of a historical control group depends on a thorough assessment, and a favorable judgment, of the applicability of the data. This assessment usually incorporates multiple perspectives, including engineering/design, biocompatibility, preclinical, and clinical perspectives.

Propensity Score Methods

A step up from an OPC or performance goal is a historical control group based on patient-level data. This facilitates a comparison that accounts for potential differences between the historical control group and prospective data. Adjustments to the nonrandomized groups help to achieve an appropriate comparison that is balanced for confounders. Propensity scores offer a more modern version of a covariate analysis or “multivariable adjustment.”

Generally, propensity score methods take a two-step approach. First, covariates (i.e., predictors or confounding variables) are used together to predict whether a patient is in the treatment or the control group. Note that this contrasts with a classical multivariable approach, in which the covariates, including an indicator for treatment group, are used to predict the outcome directly. In propensity score analysis, the probability of being in one group or the other given the set of covariates is the “propensity score” (i.e., the propensity for a subject to receive treatment). Second, the propensity scores are used for stratification, matching, covariate adjustment, or weighting to provide the adjustment. Summarizing the covariates in a single score to adjust for confounding, rather than trying to adjust for multiple covariates at the same time, is the main advantage of this approach over a classical multivariable adjustment. However, as with multivariable analyses, the ability of propensity score methods to mitigate bias depends on how well the covariates included in the model capture the confounding variables and how well balance or adjustment is achieved. Statistical adjustments are only as good as the confounders included in the modeling; a model that omits a key confounder may not provide the bias control necessary to support robust conclusions. In contrast, randomization on average balances all possible confounders, both measured and unmeasured.
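The two-step logic can be sketched end to end on simulated data. Everything here is a hypothetical illustration: the confounders (`age`, `severity`), the data-generating model, the use of plain gradient ascent instead of standard logistic regression software, and the choice of five propensity-score strata. Step 1 models treatment assignment from the covariates; step 2 compares outcomes within strata of the resulting score:

```python
import math
import random


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def fit_logistic(X, z, lr=1.0, iters=300):
    """Step 1: fit P(treated | covariates) by batch gradient ascent on the log-likelihood."""
    beta = [0.0] * (len(X[0]) + 1)  # intercept followed by one slope per covariate
    n = len(X)
    for _ in range(iters):
        grad = [0.0] * len(beta)
        for xi, zi in zip(X, z):
            resid = zi - sigmoid(beta[0] + sum(b * v for b, v in zip(beta[1:], xi)))
            grad[0] += resid
            for j, v in enumerate(xi):
                grad[j + 1] += resid * v
        beta = [b + lr * g / n for b, g in zip(beta, grad)]
    return beta


def stratified_effect(ps, z, y, n_strata=5):
    """Step 2: stratify on propensity-score quantiles and average the within-stratum
    treated-minus-control mean differences, weighted by stratum size. Strata lacking
    either group (no overlap) are skipped."""
    order = sorted(range(len(ps)), key=lambda i: ps[i])
    size = len(order) / n_strata
    total, weight = 0.0, 0
    for s in range(n_strata):
        idx = order[int(s * size): int((s + 1) * size)]
        t = [y[i] for i in idx if z[i] == 1]
        c = [y[i] for i in idx if z[i] == 0]
        if t and c:
            total += len(idx) * (sum(t) / len(t) - sum(c) / len(c))
            weight += len(idx)
    return total / weight


# Hypothetical simulated data: two confounders raise both the chance of treatment
# and the outcome; the true treatment effect is 1.0.
random.seed(2024)
X, z, y = [], [], []
for _ in range(1000):
    age, severity = random.gauss(0, 1), random.gauss(0, 1)
    treated = 1 if random.random() < sigmoid(0.8 * age + 0.8 * severity) else 0
    X.append([age, severity])
    z.append(treated)
    y.append(1.0 * treated + 2.0 * age + 2.0 * severity + random.gauss(0, 1))

beta = fit_logistic(X, z)
ps = [sigmoid(beta[0] + sum(b * v for b, v in zip(beta[1:], xi))) for xi in X]
treated_y = [yi for yi, zi in zip(y, z) if zi == 1]
control_y = [yi for yi, zi in zip(y, z) if zi == 0]
naive = sum(treated_y) / len(treated_y) - sum(control_y) / len(control_y)
adjusted = stratified_effect(ps, z, y)
print(f"naive difference: {naive:.2f}, propensity-stratified: {adjusted:.2f}")
```

Because the simulated confounders push subjects with higher values toward treatment, the naive difference overstates the true effect of 1.0; stratifying on the estimated score removes much, but typically not all, of that bias, and it can do nothing about a confounder left out of step 1.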

Unfortunately, propensity score methods are not free from potential regulatory issues. Covariate adjustment via propensity scores has been criticized on the grounds of possible analysis bias: formulating a covariate-adjusted model while the outcome of interest is visible could create perverse incentives in modeling choices. Matching based on propensity scores can also be problematic. For example, if prospective data are gathered on subjects treated with a new device, it may be challenging to find an appropriate match for every subject. If unmatched subjects are omitted from the analysis, it becomes difficult to describe the population studied and, if the device is approved, the intended use population. Alternatively, if matching criteria are relaxed to ensure all subjects are matched, the ability of matching to reduce bias is compromised. Stratification can suffer from a similar issue. While stratification on the propensity score allows assessment of the overlap of the treatment and control groups across the strata formed by propensity scores, a lack of overlap can create a problem similar to that of matching. Despite this, propensity score adjustment via stratification has been used to support several regulatory filings.
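The matching trade-off can be made concrete with greedy 1:1 nearest-neighbor matching within a caliper, one common matching scheme (used here as an illustrative assumption; the scores below are hypothetical). A tight caliper leaves some treated subjects unmatched, while a loose one matches everyone at the cost of poorer pairs:

```python
def greedy_caliper_match(ps_treated, ps_control, caliper):
    """Greedy 1:1 nearest-neighbor matching on the propensity score, without replacement.

    Returns (pairs, unmatched): pairs holds (treated_index, control_index) tuples, and
    unmatched lists treated subjects with no available control within the caliper.
    """
    available = set(range(len(ps_control)))
    pairs, unmatched = [], []
    # Visit treated subjects from highest to lowest score (hardest to match first).
    for i in sorted(range(len(ps_treated)), key=lambda k: -ps_treated[k]):
        best = min(available, key=lambda j: abs(ps_treated[i] - ps_control[j]), default=None)
        if best is not None and abs(ps_treated[i] - ps_control[best]) <= caliper:
            pairs.append((i, best))
            available.remove(best)
        else:
            unmatched.append(i)
    return pairs, sorted(unmatched)


treated = [0.85, 0.80, 0.60, 0.40, 0.30]  # hypothetical propensity scores
control = [0.70, 0.58, 0.42, 0.33, 0.20, 0.15]
for caliper in (0.05, 0.25):
    pairs, unmatched = greedy_caliper_match(treated, control, caliper)
    mean_gap = sum(abs(treated[i] - control[j]) for i, j in pairs) / len(pairs)
    print(f"caliper {caliper}: {len(pairs)} pairs, unmatched treated {unmatched}, "
          f"mean score gap {mean_gap:.3f}")
```

With the tight caliper, the two highest-score treated subjects have no comparable control and drop out of the analysis, which is the population-description problem noted above; the loose caliper keeps them but with much larger score gaps.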

Data Aggregation

The experience of a device used on a single patient is usually considered anecdotal information. Although it has been said that “the plural of anecdote is not data,” data from multiple individuals are combined, or pooled, to provide a more complete and representative picture of how the device is expected to perform. More formally, a defined protocol for data collection and analysis provides the rigor needed to turn information gathered from multiple individuals into scientific data or evidence. Determining the appropriate conditions under which to pool data from individuals, from investigational centers, or from separate clinical studies requires careful thought and planning.

Simple Pooling

The U.S. FDA frequently raises questions about “pooling” of data in multicenter trials. These concerns focus on whether it is appropriate to plan to combine data from different investigational centers. Such plans are appropriate when a common protocol with defined, objective inclusion/exclusion criteria is used and applied equally at all centers, including its operational aspects and training. Such practices are standard for modern clinical trials. One of the strengths of a multicenter trial is the greater generalizability it affords. Rather than studying a somewhat homogeneous set of patients from one center (where participants may be homogeneous with respect to demographic characteristics) with a single treating physician (who might be the world’s leading expert on the use of the investigational device), a multicenter trial, with a broader sampling of patients and a larger number of treating physicians, provides a more realistic data set that is also more likely to mirror real-world experience with the device.

If it is appropriate to plan to combine data, questions may still arise if results vary across centers. Variation in outcomes between centers is relatively common in device studies (in contrast to pharmaceutical studies) because of the role of skilled operators in the delivery of many device therapies. Specific analyses, such as an analysis of a “learning curve,” can be performed to help understand the role of physician experience. Other sources of variation may include different patient characteristics (e.g., the racial, ethnic, or socioeconomic makeup of the population served by a clinic) or differences in treatment practices (such as variations in standard of care, concomitant medications, or testing/screening procedures). Heterogeneity between subjects from different centers, to the extent it derives from particular clinically relevant characteristics, may be best understood in the context of subgroup analyses, because these allow a more specific and relevant attribution to a modifiable and, ideally, biologically plausible mechanism. Confounding risk factors may help explain variation that seemingly arises from sites; this can be explored via stratification, covariate adjustment, or examination of effect modification through interactions of risk factors.
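One simple way to ask whether event rates differ across centers more than chance would suggest is a Pearson chi-square test of homogeneity. The sketch below uses only the Python standard library (the chi-square tail probability comes from the power series of the regularized incomplete gamma function); the site counts are hypothetical, and such a test is only a screening tool, since with few sites a nonsignificant result does not establish poolability:

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square(df) variable, 1 - P(df/2, x/2), where P is
    the regularized lower incomplete gamma function computed from its power series."""
    if x <= 0:
        return 1.0
    a, t = df / 2.0, x / 2.0
    term = total = 1.0 / a
    n = 1
    while term > 1e-15 * total:
        term *= t / (a + n)
        total += term
        n += 1
    lower = total * math.exp(a * math.log(t) - t - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def site_homogeneity_test(events, totals):
    """Pearson chi-square test that the event proportion is the same at every site."""
    overall = sum(events) / sum(totals)
    stat = 0.0
    for e, n in zip(events, totals):
        exp_events, exp_non = n * overall, n * (1 - overall)
        stat += (e - exp_events) ** 2 / exp_events
        stat += ((n - e) - exp_non) ** 2 / exp_non
    df = len(events) - 1
    return stat, df, chi2_sf(stat, df)


events = [12, 15, 9, 30]      # hypothetical events per site
totals = [100, 110, 95, 105]  # hypothetical subjects per site
stat, df, p = site_homogeneity_test(events, totals)
print(f"chi-square = {stat:.2f} on {df} df, p = {p:.4f}")
```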

Occasionally, there may be variation between sites not explained by other factors. In such cases, there is often a desire to quantify the treatment effect with a “random effects” model that allows for extra variation between sites in the estimation process. Such models essentially treat the centers in a study as an extra source of variation, beyond the normal variation attributed to subjects. The unexplained variation is then accounted for to some extent in the understanding of the overall treatment effect. This added variation can create wider confidence intervals, reflecting the added uncertainty due to the unexplained variation.
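A random-effects summary across sites can be sketched with the DerSimonian-Laird estimator, one common (but not the only) way to estimate between-site variance; it is an assumption here, since the chapter does not name an estimator. The inputs are hypothetical site-level treatment effects (for example, risk differences) and their standard errors:

```python
import math


def pool_estimates(effects, std_errors, z=1.959964):
    """Inverse-variance fixed-effect and DerSimonian-Laird random-effects pooling."""
    w = [1 / se**2 for se in std_errors]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    # Cochran's Q measures dispersion of site effects around the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    k = len(effects)
    tau2 = max(0.0, (q - (k - 1)) / (sw - sum(wi**2 for wi in w) / sw))
    # Random-effects weights add the between-site variance to each site's variance.
    w_re = [1 / (se**2 + tau2) for se in std_errors]
    random_eff = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se_fixed, se_random = math.sqrt(1 / sw), math.sqrt(1 / sum(w_re))
    return {
        "fixed": (fixed, fixed - z * se_fixed, fixed + z * se_fixed),
        "random": (random_eff, random_eff - z * se_random, random_eff + z * se_random),
        "tau2": tau2,
        "Q": q,
    }


effects = [0.10, 0.25, -0.05, 0.40, 0.15]    # hypothetical site-level effects
std_errors = [0.08, 0.10, 0.09, 0.12, 0.07]  # hypothetical standard errors
res = pool_estimates(effects, std_errors)
for model in ("fixed", "random"):
    est, lo, hi = res[model]
    print(f"{model:>6}: {est:.3f} (95% CI {lo:.3f} to {hi:.3f})")
print(f"tau^2 = {res['tau2']:.4f}, Q = {res['Q']:.2f}")
```

When the estimated between-site variance `tau2` is positive, every site's weight shrinks, so the random-effects interval is wider than the fixed-effect one, reflecting the added uncertainty from unexplained variation.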

Hierarchical Models

Random effects models are an example of a hierarchical model. Such models take into account a hierarchical structure of the data, such as subjects within sites or sites within studies, to facilitate inference. This can be extended to a structure among studies, specifically considering one study as a sample from a larger population of hypothetical studies. Because of their structure, hierarchical models involve complexities that other models do not. The methods may be most familiar in the context of a meta-analysis. Before discussing practical aspects of hierarchical models, a brief discussion of how the hierarchical structure relates to the concepts of independence, correlation, and exchangeability is needed.

Clinical study participants are usually considered independent observations, meaning there is no reason to think that the data of one subject are correlated with the data of another. By analogy, consider flipping two identical coins, which would likely be considered independent for the purposes of flipping “heads” or “tails.” If 10 flips produce 2 “heads” and 8 “tails,” you likely don’t care which coin was used for any of the 10 flips. However, if one of the coins is bent in such a way that it is more likely to land on tails, knowing which coin was used for each of the 10 flips becomes important. In the latter case, we have induced some “within-coin” correlation, and accounting for it becomes important in interpreting the results. A practical clinical case where an assumption of independence would be inappropriate is a study of twins. Here, one would expect correlation between the data of a twin pair; if we know the serum cholesterol of one twin, we may be able to make a better guess of the value for the other twin, but we have likely learned nothing about the value for any other subject in the study. Accounting for correlation when present is important for the validity of statistical analyses.
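The cost of ignoring such correlation is often summarized by the design effect. As a standard approximation for clusters of equal size m (for twins, m = 2) with intraclass correlation ρ, the variance of a mean is inflated by the factor DE, and n correlated observations carry roughly the information of n_eff independent ones; the value ρ = 0.5 in the second line is hypothetical:

```latex
\begin{aligned}
\mathrm{DE} &= 1 + (m - 1)\,\rho, \qquad n_{\mathrm{eff}} = \frac{n}{\mathrm{DE}} \\
m = 2,\ \rho = 0.5,\ n = 200 \;&\Rightarrow\; \mathrm{DE} = 1.5, \quad n_{\mathrm{eff}} = \frac{200}{1.5} \approx 133
\end{aligned}
```

Analyzing the 200 twins as if they were independent would therefore overstate the precision of the results.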

Exchangeability is a concept closely related to that of independence of observations. Exchangeability means that subjects can be treated as interchangeable with respect to the order in which they enrolled in a trial; that is, the order in which a subject was enrolled does not provide information on their outcome. The technical differences between exchangeability and independence are mathematically complex (10). In approximate terms, a sequence of independent, identically distributed observations is exchangeable, but the converse is not true; an exchangeable sequence of observations may not be independent. Discomfort with the concept of exchangeability may be alleviated by this realization: investigators are often comfortable with assuming independence, and exchangeability is a less stringent assumption. Similarly, exchangeability does not mean that observations (be they subjects or studies) are identical to each other. It simply means there is no a priori way, based on their characteristics, to distinguish their expected outcomes.
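A textbook example of a sequence that is exchangeable but not independent is the Pólya urn (offered here as an illustration; it is not from the chapter). An urn starts with one red and one blue ball; after each draw, the ball is returned along with one more ball of the same color. Exact fractions show that every ordering of the same colors is equally likely, yet the draws are dependent:

```python
from fractions import Fraction
from itertools import permutations


def polya_sequence_prob(seq, red=1, blue=1):
    """Exact probability of a color sequence ('R'/'B') drawn from a Polya urn in which
    each drawn ball is returned together with one extra ball of the same color."""
    prob = Fraction(1)
    for color in seq:
        total = red + blue
        if color == "R":
            prob *= Fraction(red, total)
            red += 1
        else:
            prob *= Fraction(blue, total)
            blue += 1
    return prob


p_r = polya_sequence_prob("R")
print(polya_sequence_prob("RB") == polya_sequence_prob("BR"))  # True: order does not matter
print(polya_sequence_prob("RR"), p_r * p_r)  # 1/3 1/4: joint != product of marginals
orders = {"".join(p) for p in permutations("RRB")}
print({polya_sequence_prob(s) for s in orders})  # {Fraction(1, 12)}
```

Seeing a red ball makes a second red more likely (1/3 versus 1/4 under independence), yet no ordering of the draws is privileged, which is exactly the exchangeable-but-dependent case.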

Exchangeability may also be considered conditional on information. Returning to the example of twins, the twins may be considered exchangeable once we condition on the fact that they are related. Beyond knowing that they are related to each other, and therefore more similar, the twins’ other characteristics related to their outcome remain unknown. When such information does exist, it can be used via statistical adjustment to produce (conditionally) exchangeable data.

The U.S. FDA guidance on the use of Bayesian statistics notes three practical perspectives to help assess exchangeability. These include assessing whether studies have a similar design and execution, whether the devices under study have a similar design and manufacturing process, and finally, whether any differences of concern can be accounted for in statistical modeling.

The concepts of independence and exchangeability can also be applied at the study level and are important to consider when attempting to aggregate data from multiple studies. If particular studies are considered a sample from a larger population of hypothetical studies, they may be considered in a hierarchical framework. This is the perspective taken by most meta-analyses. In these cases, multiple studies of a therapy are combined in the hope of producing a more robust and definitive conclusion. While a meta-analysis is usually restricted to examining one particular therapy in a relatively well-defined population, the studies included may have relatively large differences in design or execution. Rather than being taken as an impediment to including such studies, between-study variation can, with the focus on an overall treatment effect, be framed either as a nuisance factor that is handled analytically or as a benefit, in that a strong treatment effect in the presence of some between-study heterogeneity helps demonstrate the robustness of the treatment.

Bayesian Methods

There are two primary schools of thought regarding the definition of probability. One, the frequentist paradigm, considers probability as a long-run average. The other, the Bayesian paradigm, considers probability as a point of view that encapsulates our existing knowledge and data. These two interpretations of probability lead to different mathematical constructions. While the bulk of traditional statistical tools were developed under the frequentist paradigm, the use and acceptance of Bayesian methods have grown recently, in large part because of their ability to incorporate additional knowledge and data.

Bayesian methods are a somewhat broad set of tools for statistical inference. They share a common foundation in their use of a “prior” distribution. A prior distribution is simply information already in hand before data are collected, expressed formally in a mathematical framework. Theoretically, a prior distribution may come from many possible sources, such as subjective opinion, expert opinion, or some external or historical data set. Combining the prior distribution with the data through a statistical model (the likelihood) yields a posterior distribution, which is the basis for Bayesian statistical inference. Great care is required in selecting the source of prior information, assessing its validity, and determining precisely how to combine it with prospective data.
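For a binary endpoint, the prior-plus-likelihood combination has a closed form when the prior is a beta distribution (a conjugate choice, assumed here for illustration). The counts and the informative prior, which behaves like 50 earlier subjects with an 80% success rate, are hypothetical:

```python
from fractions import Fraction


def beta_binomial_update(prior_a, prior_b, successes, n):
    """Conjugate update: Beta(a, b) prior + x successes in n trials -> Beta(a + x, b + n - x)."""
    return prior_a + successes, prior_b + (n - successes)


def beta_mean(a, b):
    return Fraction(a, a + b)


def beta_variance(a, b):
    return Fraction(a * b, (a + b) ** 2 * (a + b + 1))


# Hypothetical data: 18 successes in 20 subjects.
flat_post = beta_binomial_update(1, 1, 18, 20)        # uniform Beta(1, 1) prior
informed_post = beta_binomial_update(40, 10, 18, 20)  # hypothetical prior worth 50 subjects
for label, (a, b) in [("flat", flat_post), ("informative", informed_post)]:
    print(label, (a, b), float(beta_mean(a, b)), float(beta_variance(a, b)))
```

The informative prior yields a narrower posterior (smaller variance), which is the precision gain that prior information can offer; whether that prior is appropriate to combine with the new data is a separate question.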

Use of prior information can provide more statistical precision for estimating treatment effects. However, one must always ask: is the selected prior appropriate to combine with the data? Using prior information that is not appropriate may raise questions of interpretability. Additionally, even an appropriate prior can inflate the chance of a “false positive.” This matters because minimizing the chance of a false-positive finding is a key priority of regulatory agencies, and the issue is a barrier to more widespread use of Bayesian methods. Common frequentist methods often have relatively simple formulas for assessing sample size and power while constraining the type I error rate (a frequentist measure of the false-positive rate), but such formulas are not available for many Bayesian approaches. Fortunately, ever-increasing computing power and sophisticated statistical software facilitate simulations that can be used to assess the false-positive rates of Bayesian methods. Simulation approaches also carry over to more complex frequentist analyses or studies where simple formulas do not exist.
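For a simple single-arm design with a binary endpoint, the false-positive rate of a Bayesian success rule can be computed exactly by enumerating every possible outcome; more complex designs require simulation. The sketch below assumes a hypothetical rule (declare success if the posterior probability that the success rate exceeds p0 = 0.5 is at least 0.975), a sample of 50, and integer beta priors so that the beta CDF reduces to a binomial sum:

```python
from math import comb


def beta_cdf_int(x, a, b):
    """Regularized incomplete beta I_x(a, b) for positive integers a, b, via the
    identity I_x(a, b) = P(Binomial(a + b - 1, x) >= a)."""
    m = a + b - 1
    return sum(comb(m, j) * x**j * (1 - x) ** (m - j) for j in range(a, m + 1))


def declares_success(x, n, p0, prior_a, prior_b, threshold=0.975):
    """Hypothetical success rule: posterior P(p > p0) >= threshold."""
    a, b = prior_a + x, prior_b + n - x
    return 1 - beta_cdf_int(p0, a, b) >= threshold


def type_one_error(n, p0, prior_a, prior_b, threshold=0.975):
    """Exact probability of declaring success when the true success rate equals p0."""
    return sum(
        comb(n, x) * p0**x * (1 - p0) ** (n - x)
        for x in range(n + 1)
        if declares_success(x, n, p0, prior_a, prior_b, threshold)
    )


flat = type_one_error(50, 0.5, 1, 1)
optimistic = type_one_error(50, 0.5, 14, 6)  # hypothetical prior: 20 pseudo-subjects at 70%
print(f"type I error, flat prior:       {flat:.3f}")
print(f"type I error, optimistic prior: {optimistic:.3f}")
```

Under these assumptions the optimistic prior lowers the number of successes needed to declare success, so the type I error at p0 rises well above that of the flat prior, even if the prior reflects genuine historical experience.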

Subjective and expert opinion as a source of prior information have not seen widespread uptake by regulatory authorities. This is not terribly surprising given the variety of opinions of experts and the potential for conflicts of interest to taint such opinions. Specifically, while a clinician expert may have strong reasons for optimism for a new therapy, a regulator – who is another type of expert – may have strong reasons to be pessimistic, perhaps based on information they have access to that is not available to the wider public. There is often no clear way to reconcile these opinions without relying on data.

Historical data are a more common source of prior information used in regulatory settings. The incremental iteration of the device development process facilitates this. When only small changes are made on a device, and in particular small changes that do not raise substantial questions of change in safety or effectiveness relative to an earlier version, leveraging historical data may be appropriate and beneficial.

The simplest way to use historical information is to pool it directly with the prospective data, treating historical subjects as if they had been enrolled in the current study. This may give too much weight to the historical data. Down-weighting, or discounting, the historical data is one way to address concerns about their relative applicability. Early examples of such discounting employed a power prior approach. This method raises the likelihood of the historical data to a fractional power between 0 and 1, which maps to a discount on a percentage scale (e.g., 50% weighting of the prior data, 25% weighting, etc.). The power is selected, or fixed, during study design, and the operating characteristics of the trial can then be simulated to ensure appropriate behavior.
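With a binomial endpoint and a beta starting prior, the power prior also has a closed form: raising the historical likelihood to the power a0 simply multiplies the historical successes and failures by a0. The historical (170/200) and current (80/100) counts below are hypothetical, and a uniform Beta(1, 1) initial prior is an assumption:

```python
def power_prior_posterior(x0, n0, x, n, a0, init_a=1.0, init_b=1.0):
    """Beta posterior parameters for a binomial rate under a power prior.

    The historical likelihood (x0 successes in n0) is raised to a0 in [0, 1], giving the
    prior Beta(init_a + a0*x0, init_b + a0*(n0 - x0)); the current data (x in n) then
    update that prior conjugately.
    """
    if not 0.0 <= a0 <= 1.0:
        raise ValueError("a0 must lie in [0, 1]")
    a = init_a + a0 * x0 + x
    b = init_b + a0 * (n0 - x0) + (n - x)
    return a, b


for a0 in (0.0, 0.25, 0.5, 1.0):
    a, b = power_prior_posterior(x0=170, n0=200, x=80, n=100, a0=a0)
    print(f"a0 = {a0:.2f}: borrows ~{a0 * 200:.0f} historical subjects, "
          f"posterior mean {a / (a + b):.3f}")
```

Setting a0 = 0 ignores the history, a0 = 1 is full pooling, and intermediate values correspond to the percentage discounts described above; because a0 is fixed in advance, the amount borrowed does not depend on how well the two data sets agree.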

One criticism of this approach arises when historical data and prospective data are discrepant, calling into question the appropriateness of borrowing in the first place. This criticism led to the development of dynamic borrowing (in contrast to fixed borrowing, as in the original formulation of the power prior). Dynamic borrowing allows the weight applied to the historical data to vary as a function of how similar the prospective data are to, or how discrepant they are from, the historical data. When the historical and prospective data are discrepant, less weight is given to the prior data; conversely, when the data are congruent, more weight is placed on the prior.
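One concrete form of dynamic borrowing is a robust mixture prior, which mixes an informative component built from historical data with a vague component. It is one of several dynamic approaches (others include commensurate priors and hierarchical models) and is shown here as an illustration, not as the method the chapter has in mind. The mixture weights are updated by how well each component predicts the current data, so borrowing fades when the data conflict. All counts and the 0.8 prior weight are hypothetical:

```python
import math


def log_beta(a, b):
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def mixture_posterior_weight(x, n, w, inf_a, inf_b, vague_a=1.0, vague_b=1.0):
    """Posterior weight on the informative component of the prior
    w * Beta(inf_a, inf_b) + (1 - w) * Beta(vague_a, vague_b), for 0 < w < 1.

    Each component is reweighted by its beta-binomial marginal likelihood of the
    current data (x successes in n); the common binomial coefficient cancels.
    """
    log_inf = math.log(w) + log_beta(inf_a + x, inf_b + n - x) - log_beta(inf_a, inf_b)
    log_vague = (math.log(1 - w) + log_beta(vague_a + x, vague_b + n - x)
                 - log_beta(vague_a, vague_b))
    return 1.0 / (1.0 + math.exp(log_vague - log_inf))


# Hypothetical history: 170/200 successes -> informative component Beta(171, 31).
w_congruent = mixture_posterior_weight(85, 100, 0.8, 171, 31)   # current data agree
w_discrepant = mixture_posterior_weight(60, 100, 0.8, 171, 31)  # current data conflict
print(f"posterior weight on history: congruent {w_congruent:.3f}, "
      f"discrepant {w_discrepant:.6f}")
```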

It is helpful to think about the tension between fixed and dynamic borrowing as a tension between theory-driven and data-driven concerns. If we believe fixed borrowing is appropriate for a situation, we are putting a stake in the ground that this belief is well supported on theoretical grounds and that discrepancies between historical and prospective data may be largely due to chance. If we prefer dynamic borrowing, we are more willing to allow the data to drive the degree of pooling applied to the historical and prospective data. Fixed borrowing may therefore be more useful for mature technologies and study designs where there is a strong a priori foundation for applying a historical data set. Dynamic borrowing may be more amenable to technologies or designs where there is less certainty or more rapid evolution. In these situations, it may be preferable to rely more on data than on theoretical beliefs. Further, when historical data are discrepant, as may be expected for evolving and maturing technologies, the prospective data may be preferred.

Bayesian methods are also useful in adaptive designs. In these settings, prior distributions can be used to develop predictive models for reassessing the study sample size midtrial, and no historical information is needed. In essence, the accumulating trial data become the historical data that are used to predict and refine inference on the remaining trial data. Again, simulation plays a key role in assessing the false-positive rates of such designs. More generally, so-called “noninformative” priors can be used in many situations where historical data are not available. In these cases, when analogous frequentist methods exist, the two approaches often produce approximately similar results.
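A common Bayesian tool at an interim look is the predictive probability of eventual success: the chance, averaging over what the remaining subjects might show, that the final analysis will meet its success criterion. The sketch assumes a noninformative Beta(1, 1) prior, a binary endpoint, a planned total of 50 subjects with an interim look at 25, and a posterior-probability success rule (P(p > 0.5) ≥ 0.975); all of these settings are hypothetical:

```python
import math
from math import comb


def beta_cdf_int(x, a, b):
    """I_x(a, b) for positive integers a, b, via I_x(a, b) = P(Binomial(a + b - 1, x) >= a)."""
    m = a + b - 1
    return sum(comb(m, j) * x**j * (1 - x) ** (m - j) for j in range(a, m + 1))


def beta_binomial_pmf(y, m, a, b):
    """P(Y = y) when Y ~ Binomial(m, p) and p ~ Beta(a, b)."""
    log_p = (math.log(comb(m, y)) + math.lgamma(a + y) + math.lgamma(b + m - y)
             - math.lgamma(a + b + m) + math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b))
    return math.exp(log_p)


def predictive_probability(x1, n1, n_total, p0, prior_a=1, prior_b=1, threshold=0.975):
    """P(final posterior P(p > p0) >= threshold | x1 successes among n1 interim subjects)."""
    a, b = prior_a + x1, prior_b + n1 - x1  # interim posterior
    m = n_total - n1                        # subjects still to be observed
    pp = 0.0
    for y in range(m + 1):
        final_a, final_b = a + y, b + m - y  # posterior at the final analysis
        if 1 - beta_cdf_int(p0, final_a, final_b) >= threshold:
            pp += beta_binomial_pmf(y, m, a, b)
    return pp


pp_high = predictive_probability(20, 25, 50, 0.5)  # hypothetical strong interim result
pp_low = predictive_probability(10, 25, 50, 0.5)   # hypothetical weak interim result
print(f"predictive probability of success: {pp_high:.3f} vs {pp_low:.3f}")
```

A design might, for example, stop for futility or increase the sample size depending on this quantity; the thresholds for such actions, and the resulting false-positive rate, would be checked by simulation.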

Big Data

The proliferation of data collection instruments, including internet-enabled devices, wearable sensors, and electronic medical records, coupled with computing advances, is enabling advances in data science. Deep learning is producing rapid technological advances in the areas of video and image processing. Working with big data can be viewed as the ultimate level of data aggregation. However, the full promise of these methods has yet to be realized in translational research, in part because of the massive amounts of data required to support machine learning. The amount of highly relevant data typically gathered in a translational research program pales in comparison to the amount gathered and used for applications like facial recognition or self-driving cars (11). However, for several specific medical applications like diagnostic image analysis, the use of “big data” is expected to lead to massive changes. Large amounts of data provide great advantages but do not completely resolve several statistical issues.

First, methods of big data and machine learning are often focused on detecting associations rather than causal relationships. There is as yet no substitute for a randomized trial in assessing causality, although propensity score methods are seen as a way to support causal inference in nonrandomized settings. Other, more complex and evolving methods, such as Bayesian causal networks, attempt to bring causality fully into an analytical framework, but these methods require strong assumptions that may not be tenable from a clinical or regulatory perspective (12).

Second, large amounts of data can produce statistically significant findings that are practically or clinically irrelevant simply as a function of the large sample size. Methods for controlling false-positive findings, such as control of the false-discovery rate, are helpful in such situations. Additional clarity can be provided by identifying clinically relevant differences and holding them up as criteria for success, rather than relying on strictly statistical criteria.
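The Benjamini-Hochberg step-up procedure is the standard way to control the false-discovery rate across many tests. A minimal sketch with hypothetical p values:

```python
def benjamini_hochberg(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure controlling the false-discovery rate at q.

    Returns booleans in input order: True means the hypothesis is rejected (a 'discovery').
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0  # largest rank k with p_(k) <= k * q / m
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * q / m:
            k_max = rank
    rejected = [False] * m
    for i in order[:k_max]:
        rejected[i] = True
    return rejected


p_vals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]  # hypothetical
print(benjamini_hochberg(p_vals))
# [True, True, False, False, False, False, False, False]
```

At q = 0.05, only the two smallest p values survive, whereas five of the eight fall below an unadjusted 0.05 threshold.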

The limitations of initial clinical experience with new technologies, coupled with improving health care infrastructure that facilitates collection of valid longer-term data, have raised the value of data collected after initial regulatory approval. Real-world data collected in post-market studies are recognized as providing enhanced external validity and additional insights that are not possible with smaller, shorter-term studies focused on initial characterizations of safety, performance, and effectiveness. Post-market studies are often designed with considerably larger sample sizes than pre-market studies. This allows for statistical detection of much smaller effect sizes that are still of clinical interest, or for the detection of rare events. Attaining these goals is often practically possible only in a post-market study. Tools of data aggregation can be readily applied to post-market data.

All statistical methods should support the end goal of producing safe, effective, and valuable therapies, and the use of advanced methods that further this goal should be encouraged. However, good analytical methods are no substitute for a well-designed, well-powered, well-executed prospective study. Such studies should produce robust results that are consistent across a variety of statistical methods. Some of the motivation for preferring one particular statistical method over another is, unfortunately, a consequence of misinterpretation of statistics, and of p values in particular (13). The human desire to simplify things into black-and-white terms at times becomes an impediment to scientific progress. Prespecifying study designs, endpoints, and analyses is important to prevent other biases from creeping into decision making, although it also tends to sort studies into “failed” and “successful” trials. However, if science is considered a set of methods for better understanding the world and for providing explanations, taking up the challenge and applying hard work (14) and careful thought will further the goals of translational research.

Special Statistical Considerations for Preclinical Bench Testing

Several groups – the International Organization for Standardization (ISO), ASTM (formerly the American Society for Testing and Materials), and the FDA – have developed standard endpoints and protocols to bench test medical devices. The applicability of a particular standard depends on the validity of the standard relative to the intended use of the device.

Standards are often developed in enough detail that, once the decision has been made that a certain standard is appropriate to follow, few choices remain with regard to subsequent testing. Nonetheless, justification of experimental aspects, including but not limited to the number of products tested and the duration of testing, again depends on the intended use of the device.

In practice, sample sizes are often based on historical precedent. More formal methods of sample size justification are available, though these may be considerably more relaxed than the sample size justifications seen later for clinical studies. For example, bench testing may be designed only to detect certain fundamental design flaws. A study that has a high probability of observing one such flaw would be highly informative. Accordingly, one can calculate how the sample size relates to the probability of observing one or more flaws that occur at a certain underlying rate.
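The relationship between sample size and the chance of seeing at least one flaw follows from the binomial distribution. Assuming units are independent and share a common flaw rate (a simplification; the 5% rate here is hypothetical), the probability of observing at least one flaw in n units is 1 - (1 - rate)^n:

```python
import math


def prob_at_least_one(rate, n):
    """P(at least one flawed unit among n), assuming independent units with a common flaw rate."""
    return 1.0 - (1.0 - rate) ** n


def n_to_detect(rate, prob=0.95):
    """Smallest n for which P(at least one flaw observed) >= prob."""
    return math.ceil(math.log(1.0 - prob) / math.log(1.0 - rate))


for n in (10, 30, 59, 100):
    print(n, round(prob_at_least_one(0.05, n), 3))  # hypothetical 5% flaw rate
print("units needed for a 95% chance of seeing the flaw:", n_to_detect(0.05))
```

Under these assumptions, 59 units give at least a 95% chance of observing a flaw that affects 5% of units; rarer flaws require proportionally larger samples.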

Similarly, bench-testing requirements may be set to determine that a certain design element is sufficiently reliable. The device itself is assumed to have extremely high reliability, but because of limitations of time and cost, demonstrating a reasonably high reliability may be appropriate. In these cases, if no failures are observed, one may calculate a lower confidence bound on the reliability of the population of devices. The sample size may be selected to ensure that the lower confidence bound from such a calculation exceeds a defined reliability threshold. Risk analysis may be used to determine the appropriate levels of reliability and confidence needed for the stage of testing at hand; higher-risk issues with more severe consequences will require higher levels of reliability and confidence, while lower-risk issues will require lower levels.
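When no failures are observed in n tests, the one-sided lower confidence bound on reliability R at confidence C solves R^n = 1 - C (often called the success-run formula), so the required sample size is n = ln(1 - C) / ln(R). A minimal sketch, assuming independent tests; the 90% and 99% reliability targets at 95% confidence are illustrative:

```python
import math


def reliability_lower_bound(n, confidence=0.95):
    """Lower confidence bound on reliability after n tests with zero failures: (1 - C)^(1/n)."""
    return (1.0 - confidence) ** (1.0 / n)


def zero_failure_sample_size(reliability, confidence=0.95):
    """Smallest n such that zero failures in n tests demonstrates `reliability` at `confidence`."""
    return math.ceil(math.log(1.0 - confidence) / math.log(reliability))


for target in (0.90, 0.99):
    n = zero_failure_sample_size(target)
    print(f"R = {target:.2f} at 95% confidence: {n} tests, "
          f"bound achieved {reliability_lower_bound(n):.4f}")
```

For instance, demonstrating 90% reliability with 95% confidence requires 29 tests without failure, and 99% reliability requires 299, which shows how quickly the burden grows as the required reliability rises.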

While the idea of leveraging preclinical data in a combined analysis with clinical data is intriguing, formal pooling of preclinical data with clinical data is not usually performed. Rather, preclinical data usually stand on their own and are often seen as an “early warning” system for clinical events of high risk, or as an “early OK” that other events are not likely to occur at catastrophic rates in humans. In other cases, preclinical data may be considered sufficiently representative of human experience that the preclinical data themselves may be taken as sufficient evidence. For example, a system for cardiac mapping that does not provide therapy may be sufficiently understood with a combination of observable porcine model data and human data from a commercially available device (15). If future refinements of such a device are made such that no questions are raised regarding the effect on safety or effectiveness, little to no additional animal or human data may be required (16).

References

- Prentice RL. Surrogate endpoints in clinical trials: definition and operational criteria. Stat Med. 1989;8:431-40.

- Berger VW. Does the Prentice criterion validate surrogate endpoints? Stat Med. 2004;23:1571-8.

- O’Brien PC. Procedures for comparing samples with multiple endpoints. Biometrics. 1984;40:1079-87.

- Finkelstein DM, Schoenfeld DA. Combining mortality and longitudinal measures in clinical trials. Stat Med. 1999;18:1341-54.

- Pocock SJ, Ariti CA, Collier TJ, Wang D. The win ratio: a new approach to the analysis of composite endpoints in clinical trials based on clinical priorities. Eur Heart J. 2012;33:176-82.

- Lamb E. 5 sigma what’s that? Observations, Sci Am. July 17, 2012. Available at https://blogs.scientificamerican.com/observations/five-sigmawhats-that/. Accessed on December 6, 2018.

- Isakov L, Lo A, Montazerhodjat V. Is the FDA too conservative or too aggressive?: A Bayesian decision analysis of clinical trial design. SSRN; published November 28, 2017. Available at: https://ssrn.com/abstract=2641547. Accessed on December 6, 2018.

- National Research Council. The prevention and treatment of missing data in clinical trials. Washington, DC: The National Academies Press, 2010. Available at: https://doi.org/10.17226/12955. Accessed on December 6, 2018.

- U.S. Food and Drug Administration. Code of Federal Regulations. Title 21, Volume 8. Medical device classification procedures: determination of safety and effectiveness. 21 CFR 860.7. Available at: https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfCFR/CFRSearch.cfm?FR=860.7. Accessed on December 6, 2018.

- De Finetti B. Theory of Probability: A Critical Introductory Treatment. Volume 2. New York, NY: John Wiley & Sons Ltd, 1975.

- Wladawsky-Berger I. Getting on the AI Learning Curve: A Pragmatic Incremental Approach. CIO Journal, Wall Street Journal; published on July 6, 2018. Available at: https://blogs.wsj.com/cio/2018/07/06/getting-on-the-ai-learning-curve-a-pragmatic-incremental-approach/. Accessed on December 6, 2018.

- Pearl J. Causality: Models, Reasoning, and Inference. New York, NY: Cambridge University Press, 2000.

- Wasserstein RL, Lazar NA. The ASA’s statement on p-values: context, process, and purpose. Am Stat. 2016;70:129-133.

- Aschwanden C. Science isn’t broken. FiveThirtyEight; published August 19, 2015. Available at: https://fivethirtyeight.com/features/science-isnt-broken/. Accessed on December 6, 2018.

- U.S. Food and Drug Administration. Device marketing correspondence: CardioInsight Technologies, Inc. November 19, 2014. Available at: https://www.accessdata.fda.gov/cdrh_docs/pdf14/K140497.pdf. Accessed on December 6, 2018.

- U.S. Food and Drug Administration. Device marketing correspondence: Medtronic, Inc. November 4, 2016. Available at: https://www.accessdata.fda.gov/cdrh_docs/pdf16/k162440.pdf. Accessed on December 6, 2018.